This post documents a method for creating an end-to-end pipeline that automatically deploys an application to a Kubernetes cluster whenever the source code changes. We helped one of our clients, Infotel UK, to set it up so that they can deliver their software in a rapid and streamlined way. The proposed solution is hosted in Amazon Web Services, but an equivalent setup should be possible with all major cloud providers, including Microsoft Azure and Google Cloud Platform. The one described below uses AWS CodeCommit, CodePipeline, Elastic Container Registry (ECR), CodeBuild and Elastic Kubernetes Service (EKS). The application we wanted to deploy is a simple “Hello World” web service written in Python with Flask.

The approach presented below is a slightly different solution to the one proposed by Amazon in one of their blogs. Firstly, we do not rely on a lambda function to run the deployment action. Secondly, we tag Docker images built by the pipeline following the tags of the git repository. Additionally, you get the complete code base required to run our example.

Fig. 1 Overview of the AWS tools used in our continuous deployment solution.

The final outcome of this tutorial works as follows:

1. You commit (and optionally tag) your code into the git repository on CodeCommit.

2. The commit triggers a pipeline to build and deploy the code.

3. The pipeline runs a build project on CodeBuild.

4. The build project coordinates tagging (4a), storing (4b) and deployment (4c) of Docker images.

Prerequisites

This tutorial assumes basic knowledge of Git, Docker, Kubernetes and Python. You will also need:

- access to an AWS account, and

- a machine with a git client and Docker installed.

Application: Hello World

We start this tutorial with a containerized version of a simple application written in Python. We take the Hello World example from the Flask web page and build a Docker image, which we will then deploy to Kubernetes on AWS.

The code of the web application is as follows:

hello_world.py:

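    from flask import Flask, request
    from markupsafe import escape  # flask.escape was removed in Flask 3.0

    app = Flask(__name__)


    @app.route('/')
    def hello():
        # Read the optional ?name= query parameter, defaulting to "World".
        name = request.args.get("name", "World")
        # Escape the value so that user input cannot inject HTML.
        return f'Hello, {escape(name)}!'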

Let us save it to hello_world.py.

As the application needs the Flask package, which is not in the standard Python library, we create file requirements.txt with a single line indicating the required library:

requirements.txt:

Flask

Then, we will need a Dockerfile that contains all the commands needed to assemble a Docker image of our application.

Dockerfile:

    FROM python:3

    WORKDIR /opt/hello_world

    COPY requirements.txt ./
    RUN pip install --no-cache-dir -r requirements.txt

    ENV FLASK_APP=hello_world.py
    COPY hello_world.py .

    EXPOSE 5000

    CMD [ "flask", "run", "-h", "0.0.0.0" ]

In the file above we:

- [line 1] use python:3 as the baseline image, which provides the runtime environment for our application,

- [line 3] set the working directory to /opt/hello_world; this is where you can find the application in containers created from this image,

- [line 5–6] install the packages required to run the application; only Flask in this case,

- [line 8–9] configure the environment and copy the application file, so that Flask is able to start it,

- [line 11] expose port 5000, the default port which Flask uses to run web applications,

- [line 13] configure the execution command for the image; option -h 0.0.0.0 is needed so that our hello_world service is available outside of the Docker container.

Now, given the three files stored in a project directory:

project:

Dockerfile

hello_world.py

requirements.txt

we can build an image and test our service. Run the two following commands:

docker build -t hello_world_svc .

docker run -p 80:5000 hello_world_svc

If all goes well, you should be able to see output like:

* Serving Flask app "hello_world.py"

* Environment: production

...

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

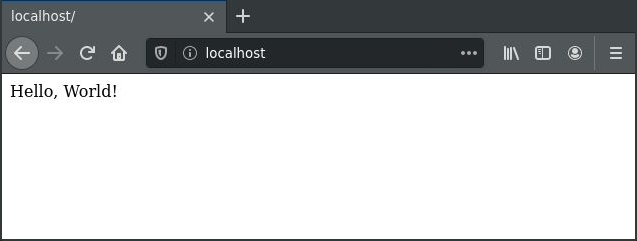

Then, you can use your web browser and navigate to address http://localhost to see the “Hello World” service running:

Note: in the browser we use the address http://localhost with the default HTTP port, 80, whilst the application reports Running on http://0.0.0.0:5000, that is, on port 5000. The mapping from port 5000, as exposed by the container, to port 80, as seen by the host machine, is done with the option -p 80:5000 passed to the docker run command above.
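If you would rather check the running container from a script than from a browser, the following is a minimal sketch using only the Python standard library. The helper names hello_url and fetch_greeting are hypothetical and not part of the project; the sketch assumes the container was started with -p 80:5000 as above.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def hello_url(base_url, name=None):
    """Build the service URL; `name` becomes the ?name= query parameter."""
    root = base_url.rstrip("/") + "/"
    if name is None:
        return root
    return root + "?" + urlencode({"name": name})


def fetch_greeting(base_url, name=None, timeout=5):
    """Fetch the greeting; the container must be running for this to succeed."""
    with urlopen(hello_url(base_url, name), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the container running, calling fetch_greeting("http://localhost", "Infotel") should return the greeting produced by hello_world.py for that name.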

At this stage the first step is completed – we can build a Docker image with a simple web application. Next, we would like to push our precious containerized application to a git repository on AWS CodeCommit.

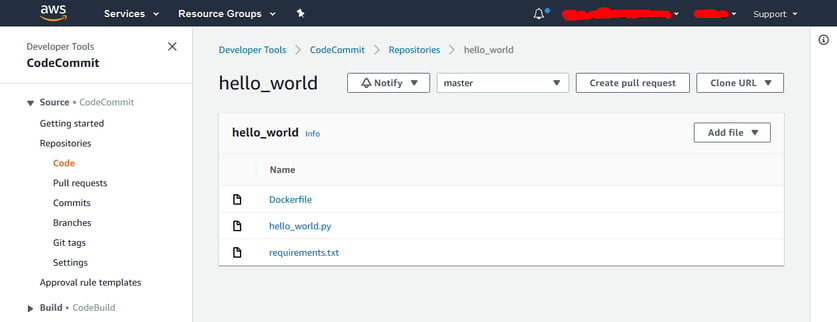

Code repository on CodeCommit

As mentioned at the beginning we are going to store our application in a git repository on AWS. For this we create an empty repository in AWS CodeCommit; follow Amazon documentation if unsure how to start.

Instead of CodeCommit you could use GitHub, GitLab or another git repository service. Importantly, the choice of git service implies some specific behaviour later on, when we look at the build environment to tag Docker images. If you pick another version control service, you need to double-check the ramifications, which I highlight later.

Given an empty repository named hello_world, we first clone it to our local machine:

git clone https://git-codecommit.<YOUR_REGION>.amazonaws.com/v1/repos/hello_world

Note: the clone command above is just an example; you can copy yours from the AWS console.

Then, we copy the existing code, i.e. the three files hello_world.py, requirements.txt and Dockerfile, to the hello_world directory, and commit and push to CodeCommit:

git add hello_world.py requirements.txt Dockerfile

git commit -m "Containerized Hello_world."

git push

We assume this is a relatively straightforward step; however, please follow the CodeCommit documentation if, for whatever reason, things do not go smoothly.

In the end the contents of our repository should look like this:

We have a flat directory with the three files in it. All nice and easy.

Continuous deployment pipeline with CodePipeline

Up to this point we have created a simple http service, containerized it using Docker and stored the code in a git repository on AWS. Our next step is to achieve continuous deployment in reaction to changes in the code repository. In other words we would like to initiate the build and deployment processes whenever a change in the code is committed. For this purpose we use the CodePipeline service from AWS.

Just find CodePipeline in the services and click Create Pipeline. We follow all default settings, whilst mandatory fields and non-default values are listed below:

- name your pipeline, e.g. hello_world_pipeline, and click Next,

- as a source provider select AWS CodeCommit, then pick the repository name hello_world and branch master; use the recommended CloudWatch Events to react to changes in CodeCommit and click Next,

- as a build provider use AWS CodeBuild and click Create project to create a new build project.

Then, under Create build project do:

- name your build project, e.g. hello_world_build,

- pick the latest Managed image with Operating system: Amazon Linux 2,

- pick Runtime: Standard,

- pick the latest Image: aws/codebuild/amazonlinux2-x86_64-standard:3.0,

- tick Privileged, since we want to build a Docker image,

- select New service role and remember its name; you may rename it, e.g. codebuild-hello_world-service-role,

- make sure you select Use a buildspec file,

- as Buildspec name enter: aws-kube-cd/buildspec.yaml,

- click Continue to CodePipeline to create the build project.

Once the build project is created it should be automatically picked by the CodePipeline Create new pipeline wizard, so you can click Next and Skip deploy stage. Finally, review the configuration and click Create pipeline.

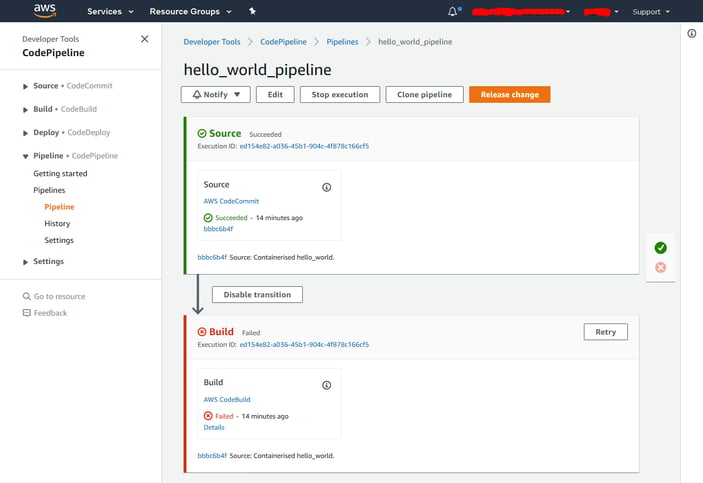

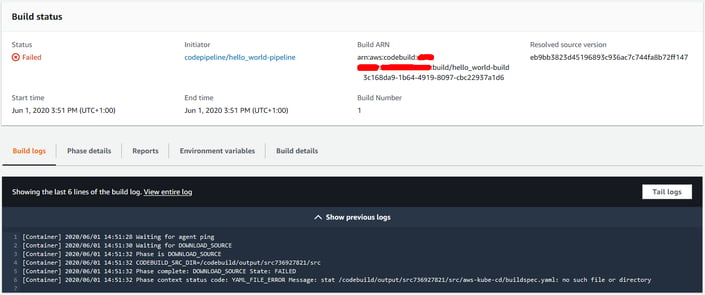

After a few moments, the pipeline will be created and triggered automatically as the new repository has been attached to it. As expected, however, the Build step is going to fail because we have not yet prepared the buildspec.yaml file (click Details to check the logs):

The detailed build logs shown in the console will look similar to this:

Notice the status code YAML_FILE_ERROR in the last line of the log.

Configuration of the CodeBuild project

With the empty skeleton of the pipeline the next step is to prepare a buildspec.yaml of the CodeBuild project. Our aim is to build a Docker image of the web application, store it in the ECR – the Docker image repository managed by AWS, and finally, to deploy the container onto the EKS cluster.

We start with a basic example of the buildspec file provided by the Amazon documentation.

buildspec.yaml:

    version: 0.2

    phases:
      pre_build:
        commands:
          - echo Logging in to Amazon ECR...
          # get-login was removed in AWS CLI v2; get-login-password is its replacement.
          - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com
      build:
        commands:
          - echo Build started on `date`
          - echo Building the Docker image...
          - docker build -t $IMAGE_REPO_NAME:$IMAGE_TAG .
          - docker tag $IMAGE_REPO_NAME:$IMAGE_TAG $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG
      post_build:
        commands:
          - echo Build completed on `date`
          - echo Pushing the Docker image...
          - docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG

Note: Remember to store the buildspec.yaml file in the aws-kube-cd subdirectory.

Let us explain the key parts of this build specification. Firstly, in the pre_build phase the build process tries to log in to ECR to be able to store the Docker image which we intend to build later on. For this command to succeed we need to create an image repository in the region indicated by the AWS_DEFAULT_REGION environment variable (see next section).

Secondly, in the build phase the image is built much like we did it earlier from the command line. Here, however, two environment variables are used. The IMAGE_REPO_NAME variable must correspond to the repository in ECR we will create, whereas IMAGE_TAG is the version we want to tag the image with. Additionally, in this step the image is tagged with ECR prefix $AWS_ACCOUNT_ID.dkr.ecr... to indicate where it is going to be stored (this is how Docker allows user-defined image repositories to be used).

Finally, in the post_build phase the image built is pushed to the ECR repository.
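To make the naming scheme concrete, here is a small sketch of how the full image reference used by docker tag and docker push is assembled from the four variables. The function name ecr_image_uri and the sample account id, region, repository name and tag are placeholders chosen for illustration only.

```python
def ecr_image_uri(account_id, region, repo_name, tag):
    """Compose <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repo_name}:{tag}"


# Placeholder values; substitute your own account id, region and repository.
print(ecr_image_uri("123456789012", "eu-west-2", "hello_world_repo", "1.0.5"))
```

The registry host name in front of the repository name is what tells Docker to push to ECR rather than to the default registry.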

Accessing the Elastic Container Registry

Since the above build project relies on ECR, our next task is to create a repository for our Docker images. It is a straightforward operation, so I leave it out of this tutorial; for those of you who need a little guidance, the Amazon documentation is a good starting point. A few things are important, though:

1. Make sure to create the repository in the same AWS region where you created your build project; in the example above it is represented by the AWS_DEFAULT_REGION environment variable.

2. Store the repository name in the IMAGE_REPO_NAME environment variable. At the same time set the AWS_ACCOUNT_ID variable.

3. Add the relevant permissions to the CodeBuild service role we created earlier.

Ad 1. If the region of the CodeBuild project is the same as the region in which the repository is created, the first step does not require your attention. The AWS_DEFAULT_REGION variable is already set in the build environment.

Ad 2. To set environment variables, go to your CodeBuild project and click the Edit button in the top section. Then, select Environment and unfold the Additional configuration section, where you will find a place to add new environment variables. Set one like: Name: IMAGE_REPO_NAME, Value: hello_world_repo, and the other: Name: AWS_ACCOUNT_ID, Value: <YOUR_12_DIGIT_ACCOUNT_ID>. Once you have set the variables, click Update environment.

Ad 3. To add permissions to the CodeBuild service role, go to the IAM management console and select the role you created earlier (during the creation of the CodePipeline/CodeBuild projects, e.g. codebuild-hello_world-service-role). Then, on the Permissions tab, you will find a CodeBuild policy which we need to edit. Select the policy, click Edit policy and then select the JSON tab. In the editor add the following permissions to the Statement array:

    {
      "Effect": "Allow",
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:CompleteLayerUpload",
        "ecr:GetAuthorizationToken",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ],
      "Resource": "*"
    },

Then, click Review policy, check that Elastic Container Registry is on the list of services with the Read, Write access level, and Save changes. Otherwise, please review this step or carefully follow Amazon documentation.
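If you prefer to prepare the edited policy JSON offline before pasting it into the console editor, the merge can be sketched in a few lines of Python. This is only an illustration: add_statement is a hypothetical helper, and the sample policy below is an empty skeleton rather than the policy that CodeBuild actually generates.

```python
import json

# The ECR permissions from the statement shown above.
ECR_PUSH_STATEMENT = {
    "Effect": "Allow",
    "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:CompleteLayerUpload",
        "ecr:GetAuthorizationToken",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart",
    ],
    "Resource": "*",
}


def add_statement(policy, statement):
    """Return a copy of `policy` with `statement` appended unless already present."""
    updated = json.loads(json.dumps(policy))  # deep copy via a JSON round trip
    statements = updated.get("Statement", [])
    if isinstance(statements, dict):  # IAM also accepts a single statement object
        statements = [statements]
    if statement not in statements:
        statements.append(statement)
    updated["Statement"] = statements
    return updated


# Hypothetical empty policy used only to demonstrate the merge.
skeleton = {"Version": "2012-10-17", "Statement": []}
print(json.dumps(add_statement(skeleton, ECR_PUSH_STATEMENT), indent=2))
```

The helper leaves the input untouched and is safe to call twice, which is convenient when the same statement might already have been added by hand.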

Now, we need to commit the buildspec.yaml file in our repository.

git add aws-kube-cd/buildspec.yaml

git commit -m "Added initial buildspec."

git push

If the above steps are all done correctly, the pipeline will be triggered automatically after the git push command completes. And, now, you should see the CodeBuild project going past the pre_build phase but failing at the following build phase. It is because we have not set the IMAGE_TAG environment variable, and so the build project fails on command:

- docker build -t $IMAGE_REPO_NAME:$IMAGE_TAG .

Therefore, our next task is to take care of tagging images.

Tagging Docker images

Various practices for tagging Docker images exist, including Semantic Versioning and using the code commit id, and you may decide which is most suitable. In this post, however, to tag a Docker image we will use either the tag of the git repository or, if the commit has not been tagged, an abbreviation of the git commit id. In that way we preserve the link back to the commit from which the image is built whilst, in case the commit was tagged, benefiting from meaningful semantic version numbers.

Of course the proposed approach will need to be customised if you envisage multiple deployment environments, like development, staging and production, but this is out of scope of this blog post.

To summarise, our aim is to find out whether or not our pipeline was triggered by a tagged commit in the code repository and then react accordingly:

- If the pipeline was triggered by a tagged commit, we want to tag the Docker image using the tag name; note that by convention code is tagged with the prefix v, e.g. v1.0.5, whereas Docker images are tagged without the prefix, i.e. 1.0.5.

- Otherwise, we want to tag the Docker image using the git commit id. This will ensure that the latest code changes on the master branch can be safely deployed to the cluster.
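The two rules above can be written down as a small pure function, which makes the intended behaviour easy to check before any shell scripting is involved. This is a sketch only: the function name image_tag is hypothetical, the ten-character abbreviation follows the length used later in this post, and picking the alphabetically first tag when several point at the same commit is an assumption of this example.

```python
def image_tag(tags_at_commit, commit_id, prefix="v", abbrev_len=10):
    """Return the Docker image tag for a build.

    tags_at_commit: git tag names pointing at the built commit (may be empty).
    commit_id: full git commit id of the build.
    """
    for tag in sorted(tags_at_commit):
        # A release tag such as "v1.0.5" maps to the image tag "1.0.5".
        if tag.startswith(prefix) and len(tag) > len(prefix):
            return tag[len(prefix):]
    # No release tag on this commit: fall back to an abbreviated commit id.
    return commit_id[:abbrev_len]


print(image_tag(["v1.0.5"], "0123456789abcdef0123"))
print(image_tag([], "0123456789abcdef0123"))
```

The first call prints the version number without the prefix, the second the first ten characters of the commit id.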

To address both these points we will need to write a simple helper script. The key problem for us is to get the latest tag name of the git repository.

Looking through the variables available in the build environment, we do not find the relevant information. The CODEBUILD_SOURCE_REPO_URL variable is, unfortunately, empty for those of us who use CodeCommit. For CodeCommit, the CODEBUILD_SOURCE_VERSION and CODEBUILD_RESOLVED_SOURCE_VERSION variables contain the commit id or branch name associated with the source code to be built. We will use one of them to name images of untagged commits. However, none of the existing variables includes the code tag information, so we need to retrieve it ourselves. Note also that if you decide to use a git repository other than CodeCommit, the environment variables may be set differently, so please check the documentation carefully.

Following Timothy Jones’ blog post about how to access git metadata, we will need to clone the source code repository and use the git command line tool to read the most recent tag on the branch. The idea is that if the current commit (cf. CODEBUILD_RESOLVED_SOURCE_VERSION) has been tagged by the user, we want to tag the Docker image with the corresponding version number. Otherwise, we just tag it with an abbreviation of the commit id.

The code below shows one way to implement it; the complete code can be found in a separate script, aws-kube-cd/get-image-tag.sh, in our repository.

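    #!/bin/bash
    # Usage: get-image-tag.sh <REPO_URL> <COMMIT_ID>
    REPO_URL="$1"
    COMMIT_ID="$2"

    # Clone into a scratch directory so that git metadata (tags) is available.
    TEMP_DIR="$(mktemp -d)"
    git clone "$REPO_URL" "$TEMP_DIR"
    cd "$TEMP_DIR" || exit 1
    git fetch --tags

    # Tag attached to exactly this commit; empty if the commit is untagged.
    TAG_NAME=$(git tag --points-at "$COMMIT_ID")

    if [[ $TAG_NAME == v* ]]; then
        # Release tag: strip the leading "v" (v1.0.5 -> 1.0.5).
        echo "$TAG_NAME" | cut -c 2-
    else
        # Untagged commit: use the first ten characters of the commit id.
        echo "$COMMIT_ID" | cut -c 1-10
    fi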

Note that the script needs two input parameters: REPO_URL and COMMIT_ID. The former we set as an environment variable (SOURCE_REPO_URL) in the CodeBuild project, whereas the latter is already available in the build environment as CODEBUILD_RESOLVED_SOURCE_VERSION. At this stage our updated buildspec.yaml needs a few more commands:

    ...
    phases:
      build:
        commands:
          ...
          - echo Tagging the new image...
          - IMAGE_BASEID="$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME"
          - echo Image base-id = $IMAGE_BASEID
          - chmod u+x ./aws-kube-cd/get-image-tag.sh
          - IMAGE_TAG="$(./aws-kube-cd/get-image-tag.sh $SOURCE_REPO_URL $CODEBUILD_RESOLVED_SOURCE_VERSION)"
          - docker tag $IMAGE_REPO_NAME $IMAGE_BASEID
          - docker tag $IMAGE_REPO_NAME $IMAGE_BASEID:$IMAGE_TAG
      post_build:
        commands:
          ...
          - docker push $IMAGE_BASEID
          - docker push $IMAGE_BASEID:$IMAGE_TAG

We added the helper variable IMAGE_BASEID to store the root of the Docker image id. Then, we retrieve the image tag from the cloned git repository and store it in the IMAGE_TAG variable. However, for the get-image-tag.sh command to work, we have to set the environment variable SOURCE_REPO_URL in our CodeBuild project. Simply set it to the HTTPS clone URL of your hello_world code repository in CodeCommit.

Next, using the base id and image tag variables, we can tag the Docker image. The first tag command, docker tag $IMAGE_REPO_NAME $IMAGE_BASEID, tags the Docker image with the name latest. This is the default Docker behaviour if no explicit tag name is given, and it allows easy access to the latest version of the Docker image. The second tag command tags the image using the desired tag name.

Once tagged we push the image to ECR. The first command will do all the heavy lifting, whilst the second will just mark existing image with an additional alias. The effect is that if you annotate your code with tag v1.0 our CodeBuild project will build, tag and push the Docker image to ECR tagged as 1.0. Otherwise, it will be tagged with the first ten characters of the commit id. Additionally, the image will be available as latest.
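The implicit latest tag can be illustrated with a short sketch that lists the two references a build ends up pushing. The helper names with_default_tag and refs_to_push are hypothetical, and the parsing is deliberately simplified: it only inspects the last path segment of the reference.

```python
def with_default_tag(image_ref):
    """Append ':latest' when the reference carries no explicit tag or digest."""
    last_segment = image_ref.rsplit("/", 1)[-1]
    if ":" in last_segment or "@" in last_segment:
        return image_ref
    return image_ref + ":latest"


def refs_to_push(image_base_id, image_tag):
    """List the two references pushed by the build: the alias and the explicit tag."""
    return [with_default_tag(image_base_id), f"{image_base_id}:{image_tag}"]


# Placeholder registry and repository names.
for ref in refs_to_push("123456789012.dkr.ecr.eu-west-2.amazonaws.com/hello_world_repo", "1.0"):
    print(ref)
```

Both references point at the same image layers, which is why the second push is cheap.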

Note: for the code to work properly you need to tag your source code before pushing it to CodeCommit. This is because the pipeline is triggered by commit changes only. If you first push untagged code and then tag it later, the CodePipeline will detect the untagged change, tag the resulting Docker image using commit id, whilst the following tagging event will remain unnoticed. And, unfortunately, the source code tag will not be picked up by any of the following commits because it is attached to the commit that initiated previous build.

Finally, there are two more little additions needed:

- allow our CodeBuild role to pull from CodeCommit by adding the GitPull permission to the role (see the previous section if unsure how to add permissions):

    {
      "Effect": "Allow",
      "Action": "codecommit:GitPull",
      "Resource": "arn:aws:codecommit:*:*:<YOUR_REPOSITORY_NAME>"
    },

Note: you need to change <YOUR_REPOSITORY_NAME> to the actual CodeCommit repository name; in our case it is hello_world.

- enable git-credential-helper in the buildspec.yaml, so that the build environment can clone the repository without the need to pass credentials on the command line:

    version: 0.2

    env:
      git-credential-helper: yes

At this stage the complete buildspec file after some refactoring should look like:

buildspec.yaml:

    version: 0.2

    env:
      git-credential-helper: yes

    phases:
      pre_build:
        commands:
          - echo Logging in to Amazon ECR...
          # get-login was removed in AWS CLI v2; get-login-password is its replacement.
          - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com
      build:
        commands:
          - echo Build started on `date`
          - echo Building the Docker image...
          - docker build -t $IMAGE_REPO_NAME .
          - echo Tagging the new image...
          - IMAGE_BASEID="$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME"
          - echo Image base-id = $IMAGE_BASEID
          - chmod u+x ./aws-kube-cd/get-image-tag.sh
          - IMAGE_TAG="$(./aws-kube-cd/get-image-tag.sh $SOURCE_REPO_URL $CODEBUILD_RESOLVED_SOURCE_VERSION)"
          - docker tag $IMAGE_REPO_NAME $IMAGE_BASEID
          - docker tag $IMAGE_REPO_NAME $IMAGE_BASEID:$IMAGE_TAG
          - echo Pushing the Docker image...
          - docker push $IMAGE_BASEID
          - docker push $IMAGE_BASEID:$IMAGE_TAG
      post_build:
        commands:
          - echo Build completed on `date`

The build specification also needs the get-image-tag.sh script to run. Store it in the aws-kube-cd subdirectory and commit the changes:

git add aws-kube-cd/buildspec.yaml aws-kube-cd/get-image-tag.sh

git commit -m "Improved image tagging."

git push

The code change above triggers the CodePipeline and CodeBuild projects. The build project should complete successfully. It should create a fresh Docker image, tag it as latest and with the abbreviation of commit id, and push it to ECR. You can check your ECR repository for the new Docker image stored.

Our next, and final, step is to deploy this fresh Docker image to the EKS cluster (for production or staging purposes).

Deployment to an EKS cluster

As we want to automatically deploy our hello_world application to an EKS cluster, the final step is to create the cluster and enable our CodeBuild project to deploy the application onto it.

Creating a cluster and NodeGroup

If you haven’t got an EKS cluster yet, please follow Amazon documentation to create one. You may also need to follow Installing kubectl documentation if you haven’t got the kubectl tool installed. Once you have created a cluster and installed tools, our assumption is that on your local machine you can successfully run command kubectl get svc and the output looks similar to this:

    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.100.0.1   <none>        443/TCP   ???

Note: When creating a new cluster, remember the name you used, e.g. hello-world-eks-cluster. Otherwise, if using an existing cluster, also make a note of its name.

Additionally, you will need to create a node group with a couple of worker nodes such that command kubectl get nodes shows at least one node with the Ready status:

    NAME                          STATUS   ROLES    AGE   VERSION
    ip-???.???.compute.internal   Ready    <none>   ???   v1.16.8-eks-e16311

Note: When creating the node group, use at least the small instance size. You won’t be able to deploy a container to a micro instance.

Deploying from localhost

Deployment to Kubernetes is a whole new area of knowledge and skills, so in this post we again limit the exercise to a very simple scenario. We would like to deploy an instance of our “Hello World” application and make it publicly available. For this to work we will create two descriptors: the application deployment and a load balancer service. The load balancer will direct public traffic to the backend application pods on the worker nodes.

Firstly, following Kubernetes documentation, we create a hello world deployment descriptor, aws-kube-cd/hello-world-deployment.yaml:

hello-world-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-world-deployment
      labels:
        app: hello-world-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: hello-world-app
      template:
        metadata:
          labels:
            app: hello-world-app
        spec:
          containers:
          - name: hello-world-app
            image: <YOUR_IMAGE_BASEID>
            ports:
            - containerPort: 5000

Note: Remember to change <YOUR_IMAGE_BASEID> to the actual value as set in the CodeBuild project. You can check ECR or the latest build details, looking for the Image base-id in the logs. However, if you are copying the Image URI from ECR, remove the image tag so that the image base id ends with the repository name (/hello_world_repo if you used the name suggested earlier).

Then, we create a simple load balancer service descriptor and store it in aws-kube-cd subdirectory:

hello-world-lbservice.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: hello-world-svc
    spec:
      selector:
        app: hello-world-app
      type: LoadBalancer
      ports:
      - protocol: TCP
        port: 80
        targetPort: 5000

In the above descriptor we indicate:

- which application the load balancer will direct traffic to: .spec.selector.app: hello-world-app,

- which port it will accept the traffic on: .spec.ports.port: 80, and

- which port it will pass the traffic to: .spec.ports.targetPort: 5000.
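The link between the two descriptors is easy to get wrong, so here is a sketch of the matching rule using plain Python dictionaries that mirror the relevant YAML fields. The function name service_routes_to is hypothetical, and the port comparison is a consistency check added for this example: Kubernetes itself treats containerPort as informational.

```python
def service_routes_to(service, deployment):
    """Return True if the Service selector and targetPort line up with the Deployment."""
    pod_template = deployment["spec"]["template"]
    pod_labels = pod_template["metadata"]["labels"]

    # Every selector key/value must be present among the pod labels.
    selector = service["spec"]["selector"]
    labels_match = all(pod_labels.get(key) == value for key, value in selector.items())

    # Ports declared by the containers; compared with targetPort as a sanity check.
    declared_ports = {
        port["containerPort"]
        for container in pod_template["spec"]["containers"]
        for port in container.get("ports", [])
    }
    ports_match = all(
        port["targetPort"] in declared_ports for port in service["spec"]["ports"]
    )
    return labels_match and ports_match


# Only the fields relevant to routing are reproduced from the two descriptors.
deployment = {
    "spec": {
        "template": {
            "metadata": {"labels": {"app": "hello-world-app"}},
            "spec": {
                "containers": [
                    {"name": "hello-world-app", "ports": [{"containerPort": 5000}]}
                ]
            },
        }
    }
}
service = {
    "spec": {
        "selector": {"app": "hello-world-app"},
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 5000}],
    }
}

print(service_routes_to(service, deployment))
```

If the selector label and the pod label ever drift apart, the load balancer is still created but has no pods to send traffic to, which is why the check is worth making explicit.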

Given the two files above, you can run deployment from your local machine:

kubectl apply -f aws-kube-cd/hello-world-deployment.yaml

kubectl apply -f aws-kube-cd/hello-world-lbservice.yaml

and check if your deployment is ready:

kubectl get deployments

If successful, you should see output like:

    NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
    hello-world-deployment   1/1     1            1           2m28s

Then, we can check the status of the load balancer and see its external address. By getting the list of Kubernetes services:

kubectl get svc

you should see two entries, like:

    NAME              TYPE           CLUSTER-IP       EXTERNAL-IP             PORT(S)        AGE
    hello-world-svc   LoadBalancer   10.100.133.184   ???.elb.amazonaws.com   80:32671/TCP   ???
    kubernetes        ClusterIP      10.100.0.1       <none>                  443/TCP        ???

Use the EXTERNAL-IP of the LoadBalancer service in your web browser to see the “Hello World” service running in the cloud. The output should look the same as earlier, in section Application: Hello World.

We are getting close… ;-)

Integrating deployment into the CodeBuild project

Up to now our CodePipeline project reacts to changes in the source code, whilst the CodeBuild project builds and pushes a new docker image to ECR. It is missing, however, the final deployment step. Thus, what we need to focus on is to let the CodeBuild project run kubectl deployment scripts.

To do so we have to make some changes in our buildspec.yaml file. First, we need to run aws eks update-kubeconfig operation to create a Kubernetes configuration file for us. Note that every single build of a CodeBuild project is executed on a separate, clean VM instance. Adding line:

- aws eks --region $AWS_DEFAULT_REGION update-kubeconfig --name hello-world-eks-cluster

should do what we need. An important detail, however, is user permissions.

Configuring aws CLI tool

It is important to note that CodeBuild instance runs under a role which is dynamically created every time the project is executed. You can easily find this out by adding the following line to the buildspec.yaml:

    ...
    phases:
      pre_build:
        commands:
          - aws sts get-caller-identity
    ...

Then, in the logs of a CodeBuild execution you will see a different identity for each run.

Note: to re-execute the CodePipeline project without changing the source code, go to the CodePipeline service console, select our hello_world pipeline and click Release change. Then, you can check Details to see the logs from the CodeBuild service.

Unfortunately, having a different role for every run of the build project makes the integration with the Kubernetes infrastructure a little tricky. Usually, we would add the role to the Kubernetes ConfigMap and let it access the cluster. But since it is a dynamic role, created for the purpose of a single build, we are unable to do it.

Instead, we will use account access keys to configure the aws CLI tool, and in this way let the CodeBuild VM get access to the cluster. If you are unsure what account access keys are, please read Amazon documentation. With basic understanding, simply go to My Security Credentials panel in the AWS console and click Create access keys.

Now, given a pair of keys associated with your user, all we need to do is to define two environment variables recognised by the aws CLI: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. They contain sensitive information, so we will follow best practices and use AWS Systems Manager Parameter Store with SecureString parameters to pass them through to a build instance.

In the buildspec.yaml go to the env section and add two parameter store variables:

    version: 0.2

    env:
      parameter-store:
        AWS_ACCESS_KEY_ID: "/Test/HelloWorld/access-key-id"
        AWS_SECRET_ACCESS_KEY: "/Test/HelloWorld/access-key-secret"
      git-credential-helper: yes
    ...

The actual value of key paths /Test/HelloWorld/access-key-id and /Test/HelloWorld/access-key-secret is not that important. It simply must be consistent with the value you define in the Parameter Store.

Using the console, go to AWS Systems Manager, select Parameter Store and create the two parameters. Make sure you set their names as specified in the buildspec.yaml file. Then, pick type SecureString, leave the other options as default, and set Value to the actual access key id and secret access key you created earlier.

Additionally, we need to let the build role access the Parameter Store. Again, find the CodeBuild role associated with our build project in the IAM console. Edit policy and add the following statement:

    {
      "Effect": "Allow",
      "Action": "ssm:GetParameters",
      "Resource": "arn:aws:ssm:<REGION>:<ACCOUNT-ID>:parameter/Test/HelloWorld/*"
    },

Note: remember to change <REGION> and <ACCOUNT-ID> to the actual region and your 12-digit account id values.
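A detail that is easy to miss is that the parameter path, which starts with a slash, is appended directly after the word parameter in the ARN. The sketch below spells this out; parameter_arn is a hypothetical helper and the region and account id are placeholders.

```python
def parameter_arn(region, account_id, path):
    """Build the Parameter Store ARN (or ARN pattern) for a parameter path."""
    if not path.startswith("/"):
        raise ValueError("this sketch expects a hierarchical path starting with '/'")
    # The path already begins with '/', so no extra separator is inserted.
    return f"arn:aws:ssm:{region}:{account_id}:parameter{path}"


# Placeholder region and account id.
print(parameter_arn("eu-west-2", "123456789012", "/Test/HelloWorld/*"))
```

Using the wildcard path covers both parameters referenced in the buildspec.yaml with a single statement.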

That is all for the aws CLI tool. If you commit buildspec.yaml and push it to the repository, CodePipeline will pick up the change and in the CodeBuild logs you will see aws sts get-caller-identity showing your own account details like:

    {
      "UserId": "<YOUR_AWS_ACCESS_KEY_ID>",
      "Account": "XXXXXXXXXXXX",
      "Arn": "arn:aws:iam::XXXXXXXXXXXX:user/???"
    }

If all is configured correctly, the above output should match the output of this command executed on your local machine. If it does not, please go through this section again. If it does, the aws tool is configured correctly and we can add to buildspec.yaml the aws eks update-kubeconfig command shown before. Now, we are ready to start using kubectl in the buildspec.yaml.
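When comparing the two outputs, the part of the Arn after the account id tells you what kind of identity the build is using. A minimal sketch of that check follows; caller_kind is a hypothetical helper, and the sample documents use made-up account ids, user names and session names.

```python
import json


def caller_kind(identity_json):
    """Return the identity type from `aws sts get-caller-identity` output."""
    arn = json.loads(identity_json)["Arn"]
    # ARN layout: arn:partition:service:region:account:resource
    resource = arn.split(":", 5)[5]
    # "user/alice" -> "user", "assumed-role/role-name/session" -> "assumed-role"
    return resource.split("/", 1)[0]


# Made-up sample outputs for illustration.
as_user = '{"UserId": "AIDAEXAMPLE", "Account": "123456789012", "Arn": "arn:aws:iam::123456789012:user/alice"}'
as_role = '{"UserId": "AROAEXAMPLE:session", "Account": "123456789012", "Arn": "arn:aws:sts::123456789012:assumed-role/codebuild-hello_world-service-role/session"}'

print(caller_kind(as_user))
print(caller_kind(as_role))
```

Once the access keys are picked up, the build log should report a user identity rather than an assumed role.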

Using kubectl to deploy the application

Remember the two descriptors prepared earlier in section Deploying from localhost? With aws configured we could add them to buildspec.yaml just after the aws command and use them to deploy our application. There is, however, one small detail we need to iron out beforehand.

The image id we used in the hello-world-deployment.yaml descriptor referred to IMAGE_BASEID with no image tag, which implicitly indicates the latest Docker image available. Unfortunately, when our deployment is already running and we want to update the Docker image, a simple kubectl apply command is not enough to force Kubernetes to refresh the application pods. The descriptor referring to the latest image does not change, so kubectl assumes that nothing has changed and ignores the apply -f hello-world-deployment.yaml operation.

Of the various ways to force the refresh, we will use one which can deploy a new application, refresh one that is already running and, most of all, keep the deployment resilient to multiple parallel changes in the code repository. The idea is to set the Docker image in the deployment descriptor with an explicit id and tag name.

Let us copy the hello-world-deployment.yaml into a new file and change it slightly:

hello-world-deployment-template.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      ...
    spec:
      ...
      template:
        ...
        spec:
          containers:
          - name: hello-world-app
            image: DOCKER_IMAGE_ID
            ...

Note: this time, as the image id, write exactly the string DOCKER_IMAGE_ID.

The string DOCKER_IMAGE_ID will serve as a placeholder for the actual image id that we will insert into the descriptor. We already have this value in the buildspec.yaml: it is IMAGE_BASEID followed by a colon and IMAGE_TAG. The relevant part of our buildspec.yaml then looks like:

    phases:
      ...
      build:
        commands:
          ...
          - echo Pushing the Docker image to ECR...
          - docker push $IMAGE_BASEID
          - docker push $IMAGE_BASEID:$IMAGE_TAG
          - echo Build completed on $(date)
      post_build:
        commands:
          - echo Deployment to your EKS cluster started on $(date)
          - echo Image id = $IMAGE_BASEID:$IMAGE_TAG
          - aws eks --region $AWS_DEFAULT_REGION update-kubeconfig --name hello-world-eks-cluster
          - sed s=DOCKER_IMAGE_ID=$IMAGE_BASEID:$IMAGE_TAG= aws-kube-cd/hello-world-deployment-template.yaml | kubectl apply -f -
          - kubectl apply -f aws-kube-cd/hello-world-lbservice.yaml
          - echo Deployment completed on $(date)
          - kubectl get svc hello-world-svc

To substitute the placeholder string we use sed and pass its output to the kubectl apply -f - command. Since the build project is triggered by a change in the code repository, a different IMAGE_TAG is set every time it runs, and so Kubernetes can notice the change. Thus, the apply command either creates a new deployment if it does not exist yet, or updates the existing one.
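For readers less familiar with sed, the substitution amounts to a plain string replacement. The sketch below shows the same step in Python; render_manifest is a hypothetical helper and the image id is a placeholder, so treat it as an illustration of the idea rather than a replacement for the buildspec command.

```python
PLACEHOLDER = "DOCKER_IMAGE_ID"


def render_manifest(template_text, image_id):
    """Replace the placeholder with the full image id (base id plus tag)."""
    if PLACEHOLDER not in template_text:
        # Fail loudly instead of applying a manifest with the wrong image.
        raise ValueError(f"{PLACEHOLDER} not found in the template")
    return template_text.replace(PLACEHOLDER, image_id)


# A shortened stand-in for hello-world-deployment-template.yaml.
template = "containers:\n- name: hello-world-app\n  image: DOCKER_IMAGE_ID\n"
print(render_manifest(template, "123456789012.dkr.ecr.eu-west-2.amazonaws.com/hello_world_repo:1.0.5"))
```

Because the rendered image field differs from build to build, each apply presents Kubernetes with a changed pod template and so triggers a rollout.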

Additionally, the last line in the buildspec.yaml shows the LoadBalancer public address, which we can use to access our HelloWorld application. All done!

Now, it is time to test it in action. Add the descriptor file with git add, commit the last few changes in the buildspec.yaml and push all to CodeCommit. The effect will be an updated deployment of our “Hello World” application.

Summary

In this post we presented an end-to-end example of continuous deployment of a simple web application to a Kubernetes cluster running on Amazon EKS. Every time you commit and push a change to the source code repository, a new Docker image is built and deployed in the cluster. For example, the following sequence:

git commit -m "Some change in the source code."

git push

will result in a new Docker image being built, tagged as an abbreviation of the commit id, pushed to the repository and deployed in the cluster.

Moreover, if you tag a change before pushing, the Docker image will be tagged accordingly. The following sequence:

git commit -m "Some other change in the source code."

git tag v1.0.1

git push --tags

git push

will result in a Docker image being built, tagged 1.0.1, pushed to ECR and deployed in the cluster.

Contrary to other solutions which you can find online, ours does not use any extra VMs, containers or Lambda functions to complete the deployment. It simply re-uses a CodeBuild instance to complete all the steps.

Of particular interest might be the way we tag Docker images and use the tag to change the Kubernetes deployment descriptor. Therefore, our solution can deploy a new application as well as update one already running. Additionally, reading the tag of the git source code repository makes it relatively straightforward to adapt our CodeBuild project to handle multiple deployment environments like testing, staging and production.

All the code related to this post is available in our code repository. Enjoy!